(.NET) App Security - What every dev needs to know

by Guest Blogger: Sebastian Holst, on Oct 19, 2017 3:04:00 AM

Let me begin by thanking Mobilize.Net for the opportunity to contribute as a guest blogger. I'm going to assume that this crowd understands all too well the costs and the risks that come with holding on to legacy code. It should come as no surprise then that holding on to legacy (outdated) risk management practices comes with its own penalties and expense.

Upgrading to a modern development environment does not automatically upgrade your application risk management practices.

You don't want to be naive, but you can't afford to be paranoid either

Cybercrime waves and digital espionage are all too real - as are the myriad new and expanding regulations and legislation targeting development and IT. Development organizations must find ways to prevent the former and comply with the latter - all while avoiding over-investing, compromising app quality or losing dev velocity. Said another way, over-engineering security can be just as deadly as exposing vulnerabilities.

The following overview of development risk, with some guidance on managing those risks mixed in, is intended to offer a non-hysterical approach to application risk management. Overview or not, the topic is a large one that can't be tackled in a single shot, so I've tried to include helpful links throughout. Please look for opportunities to download additional information, attend webinars, etc.

Visual Studio does not discriminate (and that's problematic)

Microsoft is committed to delivering the most powerful modern dev and DevOps experiences possible – and there’s a good argument to be made that they are succeeding. Code libraries, testing and debugging tools, APIs and the growing suite of DevOps tools and technologies offer development teams unprecedented insight into and control over their code throughout an application's life cycle.

Yet, the knife cuts both ways. In the hands of a “bad actor,” the very same development arsenal used to accelerate development, monitor usage, diagnose and update production software, scan for vulnerabilities, etc. can turn our most trusted applications (and the information they process) against us. And, given the chance, bad actors have shown that they are ready to do exactly that.

Hackers don’t reveal their tricks (but here are a few)

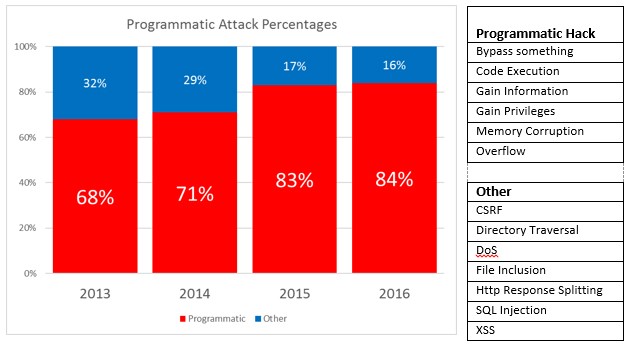

There is plenty of evidence that hackers are quick to use our own tools against us. In “How Patreon got hacked,” Detectify builds a strong case that a debugger was used to hack into a public site’s PII. This is no outlier. In an earlier post, “Like magicians, hackers do not reveal their tricks – but we will,” I drilled into the vulnerabilities published by the National Institute of Standards and Technology (NIST). NIST groups vulnerabilities into functional categories like "Privilege Escalation" or "SQL injection". As the chart below illustrates, six of the thirteen vulnerability categories that NIST tracked grew, taken together, from 68% to 84% of the total number of reported vulnerabilities over a four-year period.

While the categories themselves didn't have a whole lot in common (that’s why they are different categories), they did share one important characteristic – hackers almost certainly used developer tools to probe target applications as part of the "vulnerability discovery" process.

Development tools do for hackers what lock picking tools do for burglars.

The current cybercrime wave is our fault (we are victims of our own collective success)

If Willie Sutton were alive today, he wouldn’t use guns to rob banks, he’d be a hacker – because that’s where the money is.

The rise of cybercrime and nation-state cyber-attacks is a direct byproduct of our (collective) success in building the digital economy, e-government, social networks, and embedding software in ever-tinier devices (IoT).

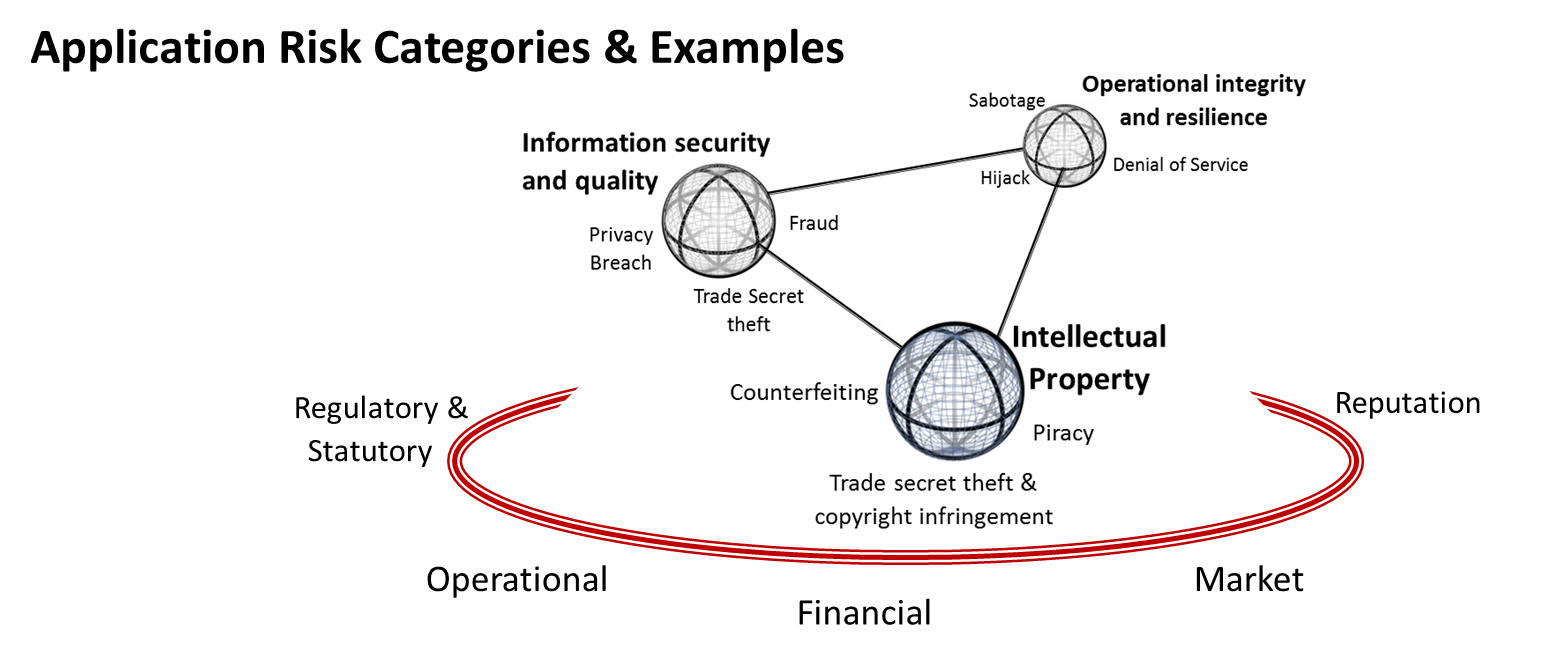

Financial losses, privacy breaches, intellectual property theft, operational disruption, counterfeiting … the list of potential risks stemming from application development seems to know no end.

With great success comes great responsibility (and we, the development community, are increasingly – and justifiably – being held to higher and higher standards)

In addition to any actual damage resulting from a breach, failure to meet regulatory/compliance obligations can result in significant punitive fines and civil lawsuits (as will failure to follow incident reporting requirements). These then quickly snowball into lost sales, brand damage, market devaluation, increased cost of capital, lower retention rates, ...

Regulations are not new – so what’s changing?

Stinging penalties. In the three examples I'll cite below, companies can expect to lose anywhere from $5M to €20M (per incident) regardless of the size of the company (and many times more for larger enterprises).

A commitment to enforcement. Regulators, auditors and law enforcement are investing in finding and punishing violators.

Unprecedented obligations that target development specifically. Most requirements target operations and IT, but this is changing as the role of development in hardening applications becomes more widely understood.

You can’t have secure data without securing the applications that process and manage that data too. Markets, customers, regulators and law enforcement are quickly recognizing this dependency.

Three Regulations You Can't Ignore

The following three examples span jurisdictions and scope, but each shares the three qualities outlined above: stinging penalties, a promise of strict enforcement, and new development-centric obligations.

1. Defend Trade Secrets Act (DTSA) (complemented by a sister EU Directive)

General Scope: Intellectual Property (IP)

Jurisdiction: US and EU

Passed into law in May, 2016

What's it for? The DTSA consolidates trade secret protection at the federal level for the first time (and correspondingly, the EU Directive has a similar objective across the EU).

Penalties: DTSA ups the potential penalties to the greater of $5M USD or 3X actual damages (with an optional doubling for “willful or malicious misappropriation”).

Obligations: This legislation is perhaps most notable in that protection under this new law might not be available unless the victim can show that the misappropriated intellectual property had been consistently treated/protected as a secret from cradle to grave.

Further, reverse-engineering is explicitly excluded as an act of misappropriation. In other words, if access to your IP was derived (in part) through reverse-engineering (a straightforward process for .NET, Java, and Android apps), that IP would most likely not be treated as secret – and, by extension, not protected under the DTSA.

What you need to know: In order to ensure protection under the DTSA, IP owners must be able to demonstrate that they have treated the IP inside their applications – and the data that flows through those applications – as secrets throughout the life of that IP. If reverse engineering is literally a “get out of jail free” card for trade secret thieves, then reasonable controls must be in place to prevent reverse engineering.

For a more thorough treatment of the DTSA, here’s a 2016 SD Times article: “Guest View: Coders are from Mars, courts are from Venus.”

2. Executive Order 13800 – “Strengthening the Cybersecurity of Federal Networks and Critical Infrastructure”

General Scope: Security and operational resilience

Jurisdiction: US

Signed: May, 2017 - security policies are in effect 1/1/2018

What's it for? In order to do business with the Federal government, Executive Order 13800 requires specific controls be in place to protect any/all unclassified information.

Executive Order 13800 states “Effective immediately, each agency head shall use The Framework for Improving Critical Infrastructure Cybersecurity… to manage the agency's cybersecurity risk.” As with the DTSA referenced above, required development practices and controls are included by reference.

Obligations: “Adequate Security” levels must be implemented “as soon as practical, but not later than December 31, 2017.”

This raises the question – what is “adequate”? It's a big question that we couldn't begin to answer here, but a great example of a development-specific requirement can be found in NIST Special Publication 800-171, section 3.1 Access Control, subsection 3.1.2. Development organizations are directed to:

“Limit information system access to the types of transactions and functions that authorized users are permitted to execute.” This control goes beyond embedded application functionality like “change address” or “add user.”

“Use of privileged utility programs” (like debuggers) and “Access control to program source code” (like reverse engineering tools) are explicitly identified.

What you need to know: In order to meet the development requirements of Executive Order 13800 (or risk losing federal contracts), development organizations need to demonstrate that they have reasonable controls in place to prevent unauthorized use of debuggers and reverse engineering tools on their production applications.

3. General Data Protection Regulation (GDPR)

General Scope: Data Privacy

Jurisdiction: European Union

Fully enforced beginning May, 2018.

What's it for? The GDPR is a regulation by which the EU intends to strengthen and unify data protection for all EU citizens.

Penalties: Penalties can go as high as €20M or 4% of a company’s annual global revenue – whichever is higher.
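As a rough illustration of the "whichever is higher" rule, here is a minimal Python sketch that computes the penalty ceiling from the two figures quoted above. The function name, the constant names and the sample revenue figures are hypothetical, chosen only for this example. Actual fines are decided case by case by regulators, so treat the result as an upper bound and not as a prediction.

```python
# Figures as quoted in this post; the names are illustrative assumptions.
GDPR_FLAT_CAP_EUR = 20_000_000   # EUR 20M
GDPR_REVENUE_PERCENT = 4         # 4% of revenue


def gdpr_max_penalty(annual_revenue_eur: int) -> int:
    """Return the penalty ceiling: the higher of the flat cap or 4% of revenue."""
    revenue_based = annual_revenue_eur * GDPR_REVENUE_PERCENT // 100
    return max(GDPR_FLAT_CAP_EUR, revenue_based)


if __name__ == "__main__":
    # Hypothetical companies: one small, one large.
    for revenue in (10_000_000, 2_000_000_000):
        ceiling = gdpr_max_penalty(revenue)
        print(f"revenue EUR {revenue:,} -> ceiling EUR {ceiling:,}")
```

Under these assumptions the flat cap dominates until revenue passes €500M. That is why the exposure is so disproportionate for small organizations: the ceiling for the small company in the example is twice its revenue.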

Obligations: The reach of the GDPR extends across the globe protecting any EU citizen’s Personally Identifiable Information (PII) regardless of where their data is collected or stored.

GDPR is the first to distinguish between “Controllers” (who own and determine the use of PII) and “Processors” (who actually process, manage, etc. the PII) and assign responsibilities and penalties to both parties.

HOWEVER – one of the most under-reported conditions of the GDPR is its shift from requiring “reasonable” or “adequate” efforts to requiring “state of the art.” Industry norms (reasonable) have been replaced by computing best practices as the reference standard.

What you need to know: There is no more safety in numbers. Under a “reasonable precautions” standard, you would be excused if no one in your industry (say, air conditioning repair) used two-factor authentication to access client financial information. Under a “state of the art” standard (what financial institutions use, for example), every air conditioning repair organization would be liable for not using two-factor authentication.

The GDPR has many important precedents for development organizations to consider. Here are three links that may be of interest:

- GDPR liability: software development and the new law

- App dev & the GDPR: three tenets for effective compliance

- PreEmptive Solutions GDPR Overview

A practical GRC road map for development (but there’s no avoiding the fact that it’s still your journey)

Five (not so simple) steps to effective application risk management

I’ll wrap up this very long post the way I began – with the truism that you can’t effectively manage risk without first assessing what risks are material (worth managing). With that in mind, I'd like to offer up the following high level workflow with some supporting links:

- Identify the “what if’s” and estimate the actual cost/pain that would follow. Someone tampers with your app - what's the worst that could happen? How likely is it to happen?

- Prioritize risks that are unacceptably high according to your organization's appetite for risk. The objective isn't to eliminate risk (not possible) - only to reduce risk to an acceptable level. There's really a diminishing return on investment once you hit that point.

- Benchmark your organization against your peers (industry, size, jurisdiction, etc.).** See the longer note below for an opportunity to benchmark your practices against the responses of 400 other development organizations.

- Select and implement controls to mitigate (reduce) risks to “acceptable” levels. The cure can't be worse than the disease - ease of use, quality, vendor viability, potential impact on your software, etc. each have the potential to drive up the cost/risk of implementing the control.

- Validate and reset as appropriate. This is a continuous process whose cadence will be set by your specific risk patterns and appetites - but it is always continuous.
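To make the identify, prioritize and select steps above a little more concrete, here is a minimal Python sketch of a risk register. Everything in it is an assumption made for illustration: the `Risk` class, the "impact times likelihood" exposure score, the function names and the dollar figures are not taken from any standard or from the tools mentioned in this post, and the benchmarking step is not modeled at all.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    impact_usd: int      # estimated cost if the "what if" actually happens
    likelihood: float    # estimated chance per year, from 0.0 to 1.0

    @property
    def exposure(self) -> float:
        # Simple expected-loss score: cost multiplied by likelihood.
        return self.impact_usd * self.likelihood


def prioritize(risks, appetite_usd):
    """Return only the risks above the appetite, highest exposure first."""
    over = [r for r in risks if r.exposure > appetite_usd]
    return sorted(over, key=lambda r: r.exposure, reverse=True)


def control_is_worthwhile(risk, control_cost_usd, residual_likelihood):
    """The cure can't be worse than the disease: a control pays off only
    if it costs less than the exposure it is expected to remove."""
    removed = risk.impact_usd * (risk.likelihood - residual_likelihood)
    return control_cost_usd < removed


if __name__ == "__main__":
    # Hypothetical register entries.
    register = [
        Risk("app tampering", 2_000_000, 0.10),
        Risk("IP theft via reverse engineering", 5_000_000, 0.02),
        Risk("verbose crash report", 50_000, 0.20),
    ]
    for risk in prioritize(register, appetite_usd=50_000):
        print(f"{risk.name}: exposure ~${risk.exposure:,.0f}")
```

Re-running the same calculation on a regular cadence with updated estimates is one simple way to cover the "validate and reset" step. The hard part is the quality of the impact and likelihood estimates, which no script can supply.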

Have I over-simplified? Certainly yes. For a more detailed application risk assessment post, check out “The Six Degrees of Application Risk.”

** Benchmarking is an important topic that I didn't get to address at all here. Understanding how your peers (by industry, by target market, by development team size) perceive application risk and which controls they use can be invaluable in establishing both "reasonable" and "state of the art" standards. Review the Application Risk Management benchmark that consolidates the risk management practices of 400 development organizations and - if you're interested in benchmarking your own practices against your peers - contact me and I will send you a link. It only takes a few minutes and we do the comparison for you (there are no fees - it's a complimentary service).

Procrastination is a policy - but one that's hard to defend

“Not getting around to making a policy” IS a policy - and there's no regulator or auditor that signs off on that.

While it may be tempting to put off integrating risk management into your development policies and practices, do not lose sight of the fact that once regulators and auditors are involved – “not getting around to making a policy” IS a policy - and there's no regulator or auditor that signs off on that.

Comments? Want to learn more? – I’d love to hear from you at sebastian@preemptive.com

...and thanks once again to the fantastic folks at Mobilize.Net