Mediocre programming, carburetors, and ChatGPT

by John Browne, on May 2, 2023 1:30:17 PM

Many years ago I was an auto mechanic. This was in the days before digital motor electronics, electronic fuel injection, or other high tech add-ons that we take for granted in new cars today. Our shop didn’t cater to folks with newer cars—we catered mostly to poor folks who had older cars that needed just enough repair to get them to work on Wednesday.

Therefore, what I got used to was old-school stuff like carburetors, mechanical ignition systems (points and plugs!), nuts and bolts, and back-breaking labor. We didn’t have OBD readers because cars didn’t have OBD.

This was an interval after I decided I didn’t want to be a microbiologist but before I discovered software.

Stick around for why this is interesting (at least to me).

Last week I mused about whether generative AI could replace our Visual Basic Upgrade Companion as a (relatively free) tool to modernize/migrate VB6 to C#. Apparently this touched a nerve based on the responses I got. (TL;DR: short programs maybe, long programs nope.)

William, one of my smarter colleagues, did some tests with simple VB6 programs and ChatGPT. The conversion (to .NET and C#) was pretty good for the most part, though note it was a very short and simple program. One thing stuck in my mind and troubled me: the conversion didn’t upgrade the database access from ADODB to ADO.NET.

How come?

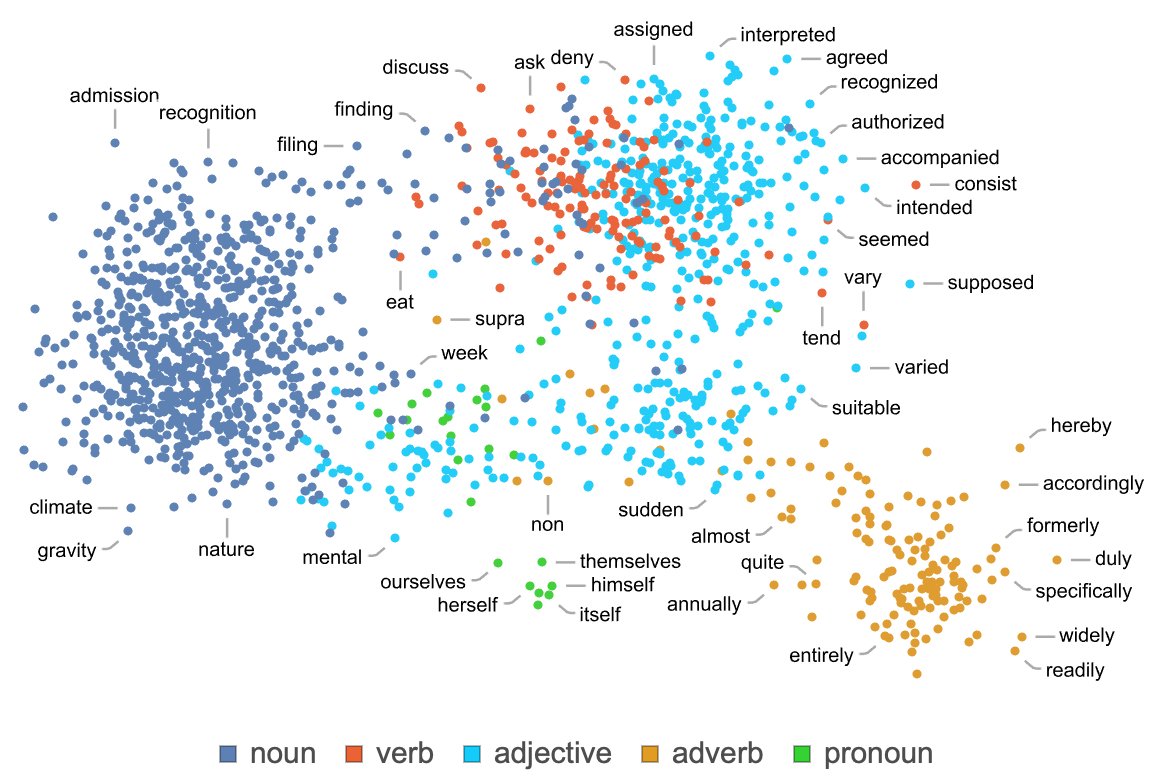

Well, today I went down a rabbit hole that started with this which led to this. Cal Newport is a computer scientist at Georgetown who has a pretty nice explainer in that New Yorker article about how generative AI works under the hood. If you read my post last week and followed the link to the explainer by Stephen Wolfram, you will certainly find this one shorter and easier to follow if you lack, say, a PhD in linear algebra.

Let’s jump to a super over-simplification of how generative AI works under the hood. ChatGPT is creating each word based on a complex calculation of the probability of what the most likely next word will be. And by “complex” I mean billions and billions of weights, calculations, and general math voodoo to create something that appears to be written by a human. In other words, Turing Test positive.
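To make the "most likely next word" idea concrete, here is a toy sketch in Python. It is nothing like ChatGPT's actual implementation: it simply counts which word follows which in a tiny made-up training text and always picks the most frequent follower. The function names and the sample text are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probabilities(follows, word):
    """Turn the raw follower counts for `word` into probabilities."""
    counts = follows.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def generate(follows, start, max_words=5):
    """Repeatedly append the most frequent follower of the last word."""
    out = [start]
    for _ in range(max_words - 1):
        counts = follows.get(out[-1])
        if not counts:
            break  # the model has never seen this word: nothing to predict
        out.append(counts.most_common(1)[0][0])
    return " ".join(out)


# Made-up training text (an assumption for this sketch).
corpus = "check the points gap then check the plugs then check the points again"
model = train_bigrams(corpus)

print(next_word_probabilities(model, "the"))   # "points" is twice as likely as "plugs"
print(generate(model, "check", max_words=3))   # check the points
print(next_word_probabilities(model, "audi"))  # {} because "audi" was never in the text
```

A real model replaces the lookup table with billions of learned weights and looks at far more context than one previous word, but the basic move of scoring candidates and picking a likely one is the same.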

This just in: people are using ChatGPT instead of a therapist to tell their troubles to. Note: this is probably not a good idea.

Ok, back to large language models. The key word here is “large.” In the case of ChatGPT it basically read the internet, including every digital book, to build a large language model. That’s a lot of words. Let’s suppose the model was constrained to only content written in English. Could ChatGPT create Spanish sentences? Or Mandarin?

No. It can only work with what it was fed. IOW you are what you eat, even if you’re an LLM.

Let’s talk cars

Ok, I was a mechanic in the 70s. I knew a lot about stuff like how carburetors and mechanical ignition systems worked. Suppose I was the complete source for an LLM aimed at auto repair. You suck all my (obsolete) knowledge about, say, 1969 VW micro-buses into an LLM AI system. Now ask it to diagnose why my 2021 Audi Q5 won’t start. How’s that going to work?

Not well: it won’t have a clue. Worse, it will possibly say something like “check the points gap.” A 2021 Audi doesn’t have points, dude. You’ve made something up. My input to the LLM was too restrictive to be useful in this situation. The LLM can regurgitate what it knows, but it’s not going to take my 1970s Car Guys knowledge base and extrapolate digital motor electronics or electronic fuel injection systems.

One of the challenges being documented is that ChatGPT will make stuff up. But it won't tell you it made it up. You have to figure that out on your own.

What’s this got to do with programming?

Over my (lengthy) career, I’ve worked with a lot of developers and looked at lots of code. Here at Mobilize.Net we probably see more lines of real-world application source code than anyone else in the world. I’m talking about millions and millions of lines of code: VB6, ASP.NET, C#, VB.NET, PowerBuilder, and more. When we do migration projects for customers (which are normally 500kloc and larger), we get into the code literally line by line. We see the good, the bad, and the butt-ugly.

And frankly, a lot of it is really awful.

Legacy code—stuff 10, 15, even 20+ years old—is never going to look like what they taught you at university. It’s not elegant, tight, clean, well-commented, beautifully designed software. It might have started off with that intention, but a quick fix here, a fast feature implementation there, some debugging hacks to meet a deadline all done over and over through the years and you get a mess.

Millions and millions of lines of industrial-strength mess are still running out in the real world. It's not that developers don't want to write good code, or don't know how. It's more about the realities of working with limited resources, bad time estimates, trying to create something from nothing and the inherent issues with software engineering which continue to produce failure rates of around 70% for new development projects.

Now let’s take our LLM and feed it all the source code we can find. All the code with the hacks, the mistakes, the dead code, the bad designs and everything else and then ask it to write some new code. What is it going to base its token-probability engine on? Where did ChatGPT get its very large data set of, say, VB6 code to build a model on?

Frankly, I don't know. I can only guess. But I'm sure that it's not proprietary customer code that has never lived in a public GitHub repo. Those sources are on old air-gapped Windows XP boxes under someone's desk, on a VM running in a private cloud, or maybe in an on-prem TFS instance. The apps themselves may be running today on a Windows 10 box, but the VB6 source code for sure is tucked away somewhere.

However, the Internet has lots and lots of VB6 sample code, because in the 90s VB was the hottest programming language in the world with an enormous ecosystem built around it for training, support, and 3rd party components. But all that source code--which I wildly presume is the basis for any LLMs that will try to modernize VB6 to C#--would be old. Very very old.

Which is where ADODB vs ADO.NET comes in. Remember, officially VB6 "ended" with the release of .NET 1.0. That's why Mobilize.Net even exists: Microsoft got Artinsoft (our original name) to build the Visual Basic Upgrade Wizard and included it for free in Visual Studio .NET to help developers move from VB6 to VB.NET. So all that old VB sample code came BEFORE ADO.NET was a thing. Most, if not all, of the publicly available VB6 code won't mention ADO.NET. ChatGPT will see "hmm, ADODB is much more 'popular' than ADO.NET, I'll use that."

Again, pure speculation.
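To illustrate that speculation (and only that; this is not how ChatGPT actually chooses an API), here is a toy Python sketch. The "training snippets" and their proportions are invented, and the helper names are hypothetical. The point is simply that if one data access style dominates the sample code, a popularity-driven picker will choose it.

```python
from collections import Counter

# Hypothetical stand-in for public VB6-era sample code.
# The snippets and their proportions are invented for this sketch.
training_snippets = [
    "Dim cn As New ADODB.Connection",
    "Dim rs As New ADODB.Recordset",
    "Set rs = New ADODB.Recordset",
    "Dim cn As New SqlConnection(connStr) ' ADO.NET",
]


def data_access_votes(snippets):
    """Count how many snippets use each data access style."""
    votes = Counter()
    for line in snippets:
        if "ADODB." in line:
            votes["ADODB"] += 1
        if "SqlConnection" in line or "ADO.NET" in line:
            votes["ADO.NET"] += 1
    return votes


def most_likely_api(snippets):
    """Pick whichever style appears most often in the training snippets."""
    return data_access_votes(snippets).most_common(1)[0][0]


print(data_access_votes(training_snippets))
print(most_likely_api(training_snippets))  # ADODB
```

Change the mix of snippets and the answer changes with it, which is the whole "you are what you eat" argument in a dozen lines.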

Let's sum this up

Despite all the hype about generative AI, I think the counter-argument is pretty valid. And I think my ADODB example kind of shows it. Jeff Maurer explains it really well here in this article (it's worth the visit for the attempts at creating an image of "ham with googly eyes fighting in Vietnam"). He sums it up:

For all of AI’s strengths, it has a clear weakness: It can’t create anything truly new. That’s why it struggled to generate a picture of Private Honeybaked deep in the shit at Khe Sanh: That photo isn’t floating around out there. AI is getting very good at making new iterations of old ideas, but it’s limited by the body of work that humans have already produced.

Another way to think about this is that AI couldn't have invented itself. The neural network, an idea Geoffrey Hinton embraced as a graduate student in 1972, was a radical concept largely dismissed by other researchers at the time. Yet neural networks are precisely what makes generative AI work. Could ChatGPT have come up with the idea of a neural network?

No.

Thus there will always be a need for creative minds to come up with something fresh, new, radical, unknown, whether in science, technology, art, music, literature, or pop culture. Those explorers, those innovators will never be replaced by AI. Yes, I think there will be a revolution in "programming" because of generative AI, but I don't for one second think it will replace everyone. Which raises the question: who will it replace?